Dynamic Cache for High-Performance Computing

Introduction

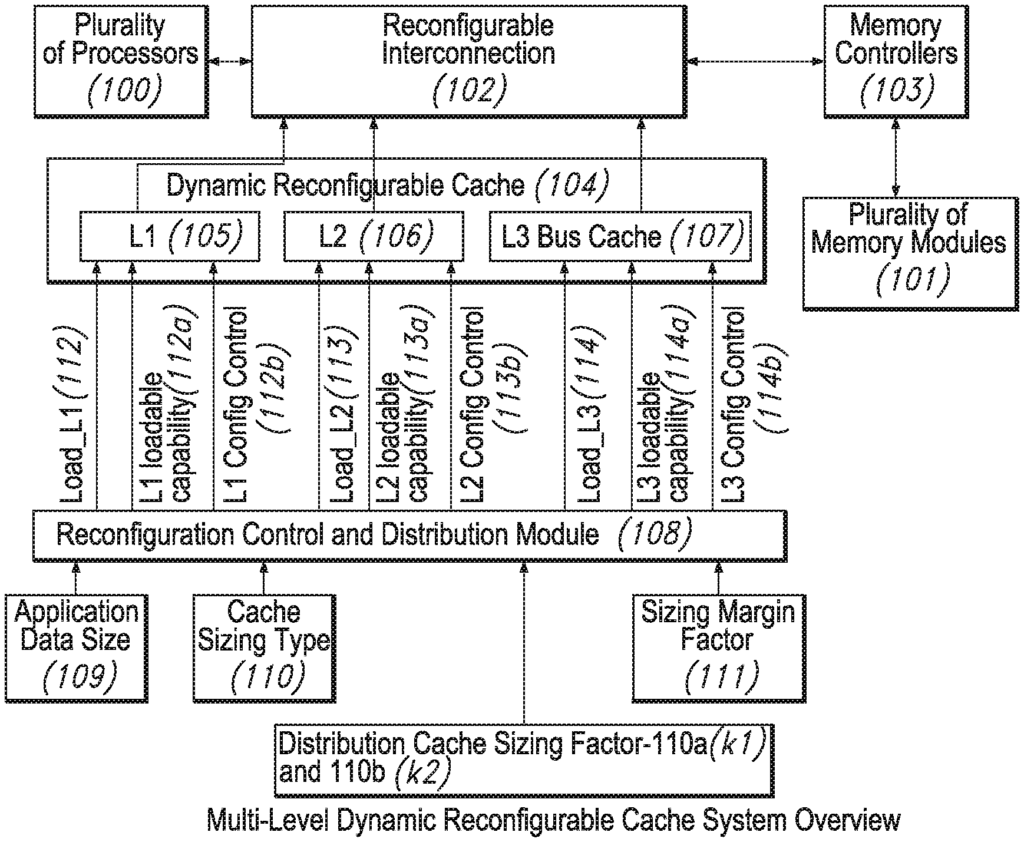

This dynamic, reconfigurable multi-level cache technology is designed to enhance the performance of multi-purpose and heterogeneous computing architectures. By dynamically adapting to the system’s current workload, this cache system ensures optimized data retrieval, reduces latency, and increases overall computational efficiency. This technology is particularly valuable for companies involved in computing, AI, semiconductor design, and cloud services, offering a solution that meets the increasing demand for faster, more efficient computing systems.

The Challenge: Handling Diverse Computing Workloads

In today’s computing landscape, systems must handle a variety of workloads, from AI computations to data-heavy tasks, while maintaining high performance and energy efficiency. Traditional static cache designs are often insufficient to manage the demands of heterogeneous computing systems, leading to bottlenecks, increased power consumption, and reduced performance. As the complexity of data processing grows, the need for adaptable cache systems that can dynamically adjust to different workloads becomes critical.

Dynamic Cache for Efficient Processing

This dynamic, reconfigurable cache system solves these issues by automatically adjusting the cache structure and size based on real-time workload demands. This flexibility improves data access speed, minimizes latency, and optimizes power usage, resulting in a more efficient computing architecture. The technology is ideal for applications ranging from high-performance computing to AI and cloud-based services, where varying workloads require quick adaptability without sacrificing performance.
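To make the idea of workload-driven resizing concrete, the sketch below models a single cache level in software. It is only an illustration and not the patented mechanism: the class name `AdaptiveLRUCache`, the LRU replacement policy, the sampling window, the miss-rate thresholds, and the doubling/halving rule are all assumptions chosen for the example.

```python
from collections import OrderedDict


class AdaptiveLRUCache:
    """Toy LRU cache that resizes itself from its recent miss rate.

    Hypothetical illustration: the thresholds and the doubling/halving
    policy are assumptions, not taken from the patent.
    """

    def __init__(self, capacity=4, min_capacity=2, max_capacity=64,
                 window=16, grow_above=0.5, shrink_below=0.1):
        self.capacity = capacity
        self.min_capacity = min_capacity
        self.max_capacity = max_capacity
        self.window = window              # accesses per measurement window
        self.grow_above = grow_above      # grow if miss rate exceeds this
        self.shrink_below = shrink_below  # shrink if miss rate is below this
        self._lines = OrderedDict()       # address -> True, oldest first
        self._accesses = 0
        self._misses = 0

    def access(self, address):
        """Record one access; return True on a hit, False on a miss."""
        hit = address in self._lines
        if hit:
            self._lines.move_to_end(address)  # mark as most recently used
        else:
            self._misses += 1
            self._lines[address] = True
            self._evict_to_capacity()
        self._accesses += 1
        if self._accesses == self.window:
            self._reconfigure()
        return hit

    def _evict_to_capacity(self):
        # Drop least recently used entries until the cache fits.
        while len(self._lines) > self.capacity:
            self._lines.popitem(last=False)

    def _reconfigure(self):
        # Resize from the miss rate of the window that just ended.
        miss_rate = self._misses / self._accesses
        if miss_rate > self.grow_above:
            self.capacity = min(self.capacity * 2, self.max_capacity)
        elif miss_rate < self.shrink_below:
            self.capacity = max(self.capacity // 2, self.min_capacity)
            self._evict_to_capacity()
        self._accesses = 0
        self._misses = 0


if __name__ == "__main__":
    cache = AdaptiveLRUCache(capacity=4, window=16)
    # A working set of 8 addresses thrashes a 4-entry LRU cache,
    # so the first window should trigger a capacity increase.
    for _ in range(2):
        for address in range(8):
            cache.access(address)
    print("capacity after first window:", cache.capacity)
```

In this toy model, a working set larger than the cache produces a high miss rate and the cache grows; once the working set fits and misses become rare, the cache shrinks again to save resources. A hardware implementation would reconfigure cache levels and structure rather than a Python dictionary, but the feedback loop is the same in spirit.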

Key Benefits for Computing and AI Industries

For semiconductor companies, this technology provides a way to improve processor designs by integrating dynamic caching capabilities that enhance multi-core processing performance. AI and machine learning companies can leverage this system to accelerate data processing and improve algorithm efficiency, leading to faster results and better system optimization. Cloud service providers will find value in the cache’s ability to optimize performance across diverse virtualized environments, ensuring consistent and reliable service for users.

Investing in Future-Ready Computing

Licensing this dynamic cache technology positions your company at the forefront of innovation in high-performance computing and data processing. By offering a flexible, reconfigurable cache system, your business can deliver next-generation processing solutions that meet the demands of modern computing architectures, with lower latency and better energy efficiency across a range of applications.

- Claims

We claim:

1. A system for dynamic reconfiguration of cache, the system comprising:

9. A computer-implemented method for reconfiguration of a multi-level cache memory, the method comprising:

16. The method as claimed in claim 9 further comprising:

17. The method as claimed in claim 16, further comprising:

18. A system for dynamic reconfiguration of cache, the system comprising:

20. The system as claimed in claim 18, further comprising:

Title

Dynamic reconfigurable multi-level cache for multi-purpose and heterogeneous computing architectures

Inventor(s)

Khalid ABED, Tirumale RAMESH

Assignee(s)

Jackson State University

Patent #

11372758

Patent Date

June 28, 2022